When AI Stops Flattering Us: Why Negative Feedback Feels Unfair

At Softtek, we're passionate about creating intelligent products that not only perform but resonate with the people who use them. Still, technology doesn’t just change workflows; it changes how people feel, especially when a machine is the one evaluating them. And here’s the twist: when those evaluations turn negative, people don’t just dislike the score. They distrust the system.

That reaction isn’t just anecdotal. In recent research co-authored by Ricardo Garza, Softtek’s VP of Innovation & Emerging Tech, and published in the Journal of Research in Interactive Marketing, the team explored how people emotionally respond to being evaluated by machines instead of humans — and what that means for organizations designing AI-driven experiences.

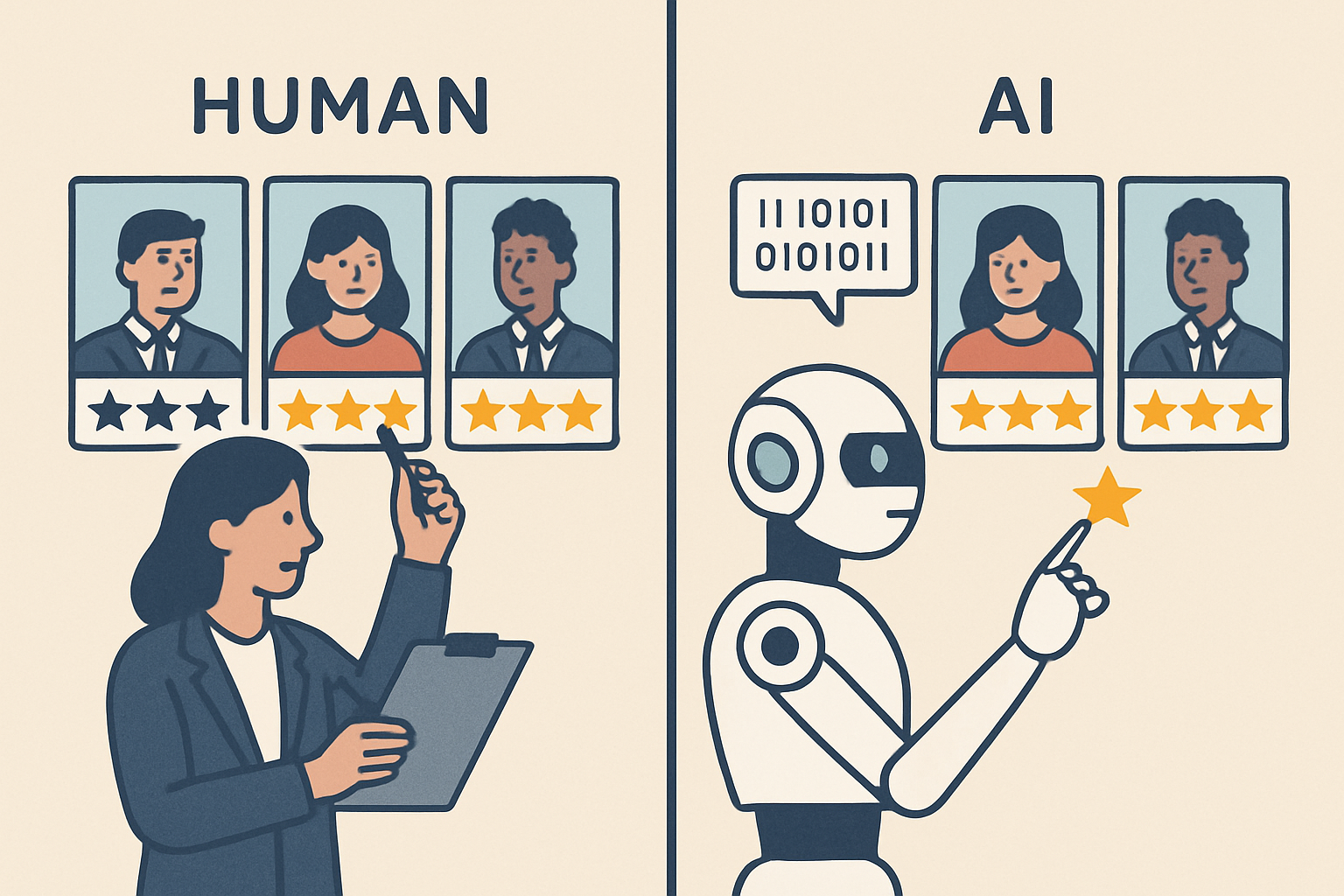

From grading student work and screening job candidates to everyday interactions like getting feedback or brainstorming ideas with AI tools, machine judgment is increasingly woven into daily life. Along the way, users often come to expect AI flattery (overly polite, affirming feedback), which makes neutral or negative evaluations from machines feel colder, harsher, and less fair.

To find out whether that perception holds up, our innovation group teamed up with academic researchers for a series of lab experiments. Volunteers completed a simple task, then received a score (sometimes positive, sometimes neutral, sometimes negative) from either a human evaluator or our fictional AI, e-77. Then we asked: How fair did that evaluation feel?

The numbers tell the story

- Positive feedback? No problem. Fairness ratings were virtually identical: 5.31 for humans vs. 5.26 for AI. Turns out, people embrace good news, no matter who delivers it.

- Neutral feedback? Bias begins. Fairness dropped for AI (4.81) compared to humans (5.58). The robot’s shine starts to fade.

- Negative feedback? Bias explodes. Fairness plunged to 4.21 when humans delivered bad news, but crumbled to 2.62 when AI did.

The research team replicated this pattern in a second study (negatives: 4.07 for humans vs. 2.71 for AI), so it’s no fluke: good news passes; bad news backfires.
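For readers who like to see the arithmetic, here is a minimal sketch that computes the human-versus-AI fairness gap from the mean ratings quoted above. The variable and function names are our own for illustration, not taken from the published study, and the result is a simple difference of means rather than a significance test.

```python
# Mean fairness ratings as quoted in this article (first study).
STUDY_1 = {
    "positive": {"human": 5.31, "ai": 5.26},
    "neutral": {"human": 5.58, "ai": 4.81},
    "negative": {"human": 4.21, "ai": 2.62},
}

# Second study: only the negative-feedback means are quoted in this article.
STUDY_2_NEGATIVE = {"human": 4.07, "ai": 2.71}


def fairness_gap(means):
    """Return the human mean minus the AI mean, rounded to two decimals."""
    return round(means["human"] - means["ai"], 2)


# Gap per feedback type in the first study.
gaps = {feedback: fairness_gap(means) for feedback, means in STUDY_1.items()}

# Gap for negative feedback in the replication.
replication_gap = fairness_gap(STUDY_2_NEGATIVE)

if __name__ == "__main__":
    for feedback, gap in gaps.items():
        print(f"{feedback}: humans rated {gap:.2f} points fairer than AI")
    print(f"negative (second study): {replication_gap:.2f} points")
```

With the figures above, the gap grows from 0.05 points for positive feedback to 0.77 for neutral and 1.59 for negative, and it stays wide (1.36) for negative feedback in the second study.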

Why do we judge robots so harshly?

The research uncovered what we call “transparency anxiety.” When a machine delivers a negative verdict, people get uneasy about the mysterious black box behind it. That anxiety drags down their sense of fairness. Positive results don’t provoke the same reaction, which is why the bias only shows up on the downside. (Apparently, we’re fine with robots as long as they’re our cheerleaders, not our critics.)

What it means for your business

Smarter systems aren’t just a technical challenge—they’re a human-experience challenge. If you’re rolling out chatbots, automated scoring, robo-interviewers, or recommendation engines, keep these design imperatives front and center:

- Build in transparency. Show the criteria, surface key data points, and offer a brief explanation alongside the score. An open box feels less threatening than a locked one.

- Soften the blow. Pair negative outcomes with empathetic phrasing, appeal options, and easy access to a human contact. (Think of it as the AI version of “Would you like to speak to a manager?”—no actual “Karens” required.)

- Humanize the interaction. Give virtual evaluators names and voices, use conversational language, and allow second opinions. The bias isn’t about capability—it’s about connection and control.
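As one way to picture these three imperatives together, the sketch below models a feedback message that carries its criteria, a short explanation, and a route to a human reviewer. Everything here is hypothetical: the `Evaluation` class, the `render_feedback` function, the 50% threshold for treating a score as negative, and the contact address are illustrative assumptions, not a description of the system used in the research.

```python
from dataclasses import dataclass, field
from typing import Dict


@dataclass
class Evaluation:
    """Hypothetical container for a score plus the context that explains it."""
    evaluator_name: str                  # a named evaluator, e.g. "e-77"
    score: float
    max_score: float                     # assumed to be greater than zero
    criteria: Dict[str, str] = field(default_factory=dict)  # criterion -> finding
    explanation: str = ""
    appeal_contact: str = ""             # where to ask for a human second opinion


def render_feedback(ev: Evaluation, negative_below: float = 0.5) -> str:
    """Build a message that shows the score, the reasons, and an appeal route."""
    lines = [f"{ev.evaluator_name} scored your submission "
             f"{ev.score:g} out of {ev.max_score:g}."]
    # Transparency: give a brief explanation and surface the criteria used.
    if ev.explanation:
        lines.append(f"Why: {ev.explanation}")
    for criterion, finding in ev.criteria.items():
        lines.append(f"- {criterion}: {finding}")
    # Soften the blow: for low scores, offer an easy path to a human review.
    if ev.score / ev.max_score < negative_below and ev.appeal_contact:
        lines.append("If this doesn't look right, you can request a human "
                     f"review: {ev.appeal_contact}")
    return "\n".join(lines)


if __name__ == "__main__":
    low = Evaluation(
        evaluator_name="e-77",
        score=3,
        max_score=10,
        criteria={"Completeness": "two of five sections were missing"},
        explanation="The submission covered only part of the task.",
        appeal_contact="reviews@example.com",  # placeholder address
    )
    print(render_feedback(low))
```

The design choice worth noting is that the explanation and criteria are always shown, while the appeal route appears only when the score falls below the chosen threshold. Whether to show it on every result is a judgment call for your own product.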

The bottom line

So why do harsh machine judgments feel unfair? Because when AI stops flattering and starts criticizing, the black box behind its decision-making triggers transparency anxiety—and that erodes trust.

Softtek’s role in this research underscores our commitment to responsible AI—not just building algorithms, but asking the hard questions about fairness, trust, and adoption. If you want your AI to be accepted, don’t just make it smart—make it transparent, empathetic, and a little more human.

Want the full story?

Download the complete report for all the stats and analysis. Learn more about what we’re doing in AI.
