Dimensionality Reduction in Machine Learning

Machine learning algorithms are typically able to extract important information from feature-rich datasets, whether they are tables with many columns and rows or images with millions of pixels. Couple this with breakthroughs in cloud computing, and the result is that larger and larger machine learning models can be run with great power.

However, each feature that is added increases the complexity of the executable, which makes locating information using these powerful algorithms also complicated. The solution is dimensionality reduction, which consists of employing a set of techniques to remove excessive and unneeded features from Machine Learning models.

Dimensionality reduction also severely reduces the cost of machine learning and allows complex problems to be solved with simple models.

This technique is especially useful in predictive models, as they are datasets that contain a large number of input features, and makes their function more complicated.

Therefore, the dimensionality reduction technique is defined as a way of converting a high dimensional dataset into a lower dimensional dataset, ensuring that the information it provides is similar in both cases. As mentioned, this technique is often used in machine learning to obtain a tighter predictive model while solving the regression and classification problems presented by the algorithms.

High dimensional data, speech recognition, data visualisation, noise reduction or signal processing, among others, are the main fields of application of dimensionality reduction.

Dimensionality problems

Going into detail on the dimensionality problems that appear in Machine Learning models, we must first know that these models are responsible for assigning features to results. For example, a predictive weather model has a dataset of information collected from different sources. These sources include data on temperature, humidity, wind speed. Bus tickets purchased, traffic and amount of rainfall from different times at the same location. As can be seen, not all data are relevant for weather forecasting.

Sometimes some features are not related to the target variable. Other features may be correlated with the target variable, but have no specific relationship to it. There may also be links between the feature and the target variable, but the effect is insignificant. In the above example, it is clear which characteristics are useful and which are not, but in other problems the difference may not be obvious and may require more data analysis.

Dimensionality reduction may not seem to make sense, because when too many features are present, a more complex model with more training data and more computational power will also be needed to train the model properly. However, models do not understand chance and try to assign any feature included in their dataset to the target variable, even if there is no chance relationship, generating erroneous models. By reducing the number of features, a simpler and more efficient model can be achieved.

Dimensionality reduction is responsible for identifying and removing features that decrease the performance of the machine learning model. In addition, there are several dimensionality techniques that will be discussed below, each of which is useful for certain situations.

How to reduce dimensionality?

Among the most basic dimensionality reduction methods, the most efficient is to identify and select a subset of characteristics that are highly relevant to the target variable. This technique is known as feature selection, and is particularly effective when dealing with tabular data where each column represents a specific type of information.

Feature selection mainly does two things: it keeps features that are highly related to the target variable and it contributes more to the variation of the dataset. For example, Python has a library, known as Scikit-learn, which has functions to analyse and select features suitable for Machine Learning models.

A common aspect is to use scatter plots and heat maps to visualise the covariance of different features, i.e., these tools are used to find out if two features are highly related to each other and if they will have a similar effect on the target variable, to determine that it is not necessary to include both in the model, eliminating one of them without negatively impacting performance. Similarly, variables that do not contribute information to the target variable are removed.

An example might be a data set of 25 columns that can be represented by only 7 of them, capable of representing 95% of the effect on the target variable. Thus, up to 18 functions can be eliminated, simplifying the machine learning model without losing efficiency.

Generally, when dimensionality reduction occurs, up to 15% of the variability in the original data is lost, but it brings with it advantages such as shorter training time, requires less computational resources and increases the overall performance of the algorithms. In addition, dimensionality reduction solves the problem of overfitting. When there are many features, models become more complex and tend to over-fit; thanks to reduction, this problem disappears. In turn, reduction takes care of multicollinearity, which occurs when an independent variable is highly correlated with one or more independent variables, so reduction combines these variables into a single set.

On the other hand, this technique is also very useful for factor analysis, an approach that deals with locating latent variables that are not directly measured in a single variable, but inferred from other variables in the dataset, where the latent variables are known as factors. It also eliminates noise in the data by keeping only the most important features and removing redundant ones, thus improving the accuracy of the model.

In the field of images, it is very useful for image compression, allowing the size in bytes to be minimised while maintaining much of the image quality. Basically, the pixels that make up an image are considered as variables of the image data. Using principal component analysis (PCA), an optimal number of components is maintained to balance variability and image quality.

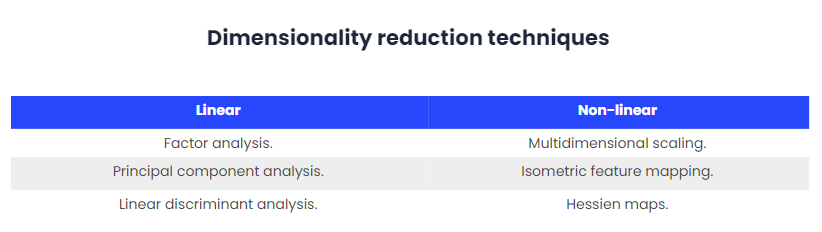

Finally, these techniques are differentiated between linear and non-linear, some of the most prominent of which are:

Benefits and disadvantages of dimensionality reduction techniques

Some of the main benefits of applying the dimensionality reduction technique are the following:

- Reducing the dimensions of the features implies a reduction in the space required to store the dataset, because the dataset is also reduced.

- The model training time is shorter for reduced dimensions.

- Faster data visualisation is facilitated by reducing the number of features in the dataset.

- Redundant features in the multicollinearity domain disappear.

Dimensionality reduction also has certain disadvantages which are mentioned below, although the advantages are greater:

- Some data may be lost due to dimensionality reduction.

- In the PCA dimensionality reduction technique, the principal components to be considered are sometimes not known.

Conclusions

In short, having too many features will result in an inefficient Machine Learning model. However, the ability to reduce features through dimensionality reduction is a tool that can be used to create more optimised and efficient models.

Dimensionality reduction can be applied to different fields such as high dimensional data, speech recognition, data visualisation, noise reduction or signal processing, among others. It can also be used to transform non-linear data into a linearly separable form.

The use of this technique brings significant benefits ranging from reducing the storage space of the dataset to eliminating redundant features, optimising model training time and facilitating data visualisation. However, it is a technique that requires knowledge and appropriate equipment to perform, as more data than necessary may be removed and an erroneous model may be generated.