Bringing exascale supercomputers to market

The University of Cambridge, Intel Corporation and Dell Technologies have been pursuing the development of high-performance computing (HPC) for some time now. Today, they continue to work together to bring exascale technologies to the world.

Exascale computing refers to systems capable of at least one exaflop, that is, a quintillion (10^18) floating-point operations per second. Such systems can solve very complex and demanding problems that require processing large amounts of data.

This technology is currently at the centre of many countries’ efforts to advance technological progress and is a key strategic resource. The United States, China and Europe are all working to develop their own exascale systems.

Exascale systems are roughly 1,000 times faster than petascale systems: they can perform a quintillion (10^18) calculations per second, compared with a quadrillion (10^15). In other words, exascale will provide a dramatic increase in computing power. The number of computations that these systems can perform enables breakthroughs in, for example, pattern modelling, the evaluation of different drugs during pandemics and the design of new clean energy processes.
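As a rough illustration of these orders of magnitude, the sketch below compares the ideal run time of one workload at petascale and at exascale. The workload size (10^21 operations) and the helper name `seconds_to_finish` are assumptions made for this example, and the calculation ignores I/O, communication and any other overhead that a real system would incur.

```python
# Sustained floating-point throughput, in operations per second.
PETAFLOP = 10**15  # petascale: one quadrillion operations per second
EXAFLOP = 10**18   # exascale: one quintillion operations per second


def seconds_to_finish(total_operations: int, ops_per_second: int) -> float:
    """Return the ideal run time, ignoring I/O, communication and idle time."""
    return total_operations / ops_per_second


# Hypothetical workload size, chosen only for illustration.
workload = 10**21

speedup = EXAFLOP // PETAFLOP
petascale_days = seconds_to_finish(workload, PETAFLOP) / 86_400
exascale_minutes = seconds_to_finish(workload, EXAFLOP) / 60

print(f"speed-up: {speedup}x")
print(f"petascale: {petascale_days:.1f} days")
print(f"exascale: {exascale_minutes:.1f} minutes")
```

Under these idealised assumptions, a job that would occupy a petascale machine for more than a week finishes in well under an hour at exascale.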

However, this growth in performance implies abrupt changes in hardware architectures, driven by the end of Dennard scaling and the slowdown of Moore’s law.

In the face of these major developments, software ecosystems become critical: development teams need the right skills and significant incentives to take advantage of the new technological possibilities.

The most important aspect to consider is the software architecture and the opportunities for application modernisation. In other words, all these exascale-focused groups and projects must take into account that the key aspect is novel software supported by adequate hardware.

Therefore, obtaining effective performance on these new architectures requires further work on refactoring and tuning. Exascale computing also requires architectures that combine multi-core CPUs with multiple GPUs, which makes them complex. In turn, new highly concurrent algorithms need to be developed and coded, which calls for new programming models with new abstraction layers in order to obtain good performance.
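To make the idea of a highly concurrent algorithm more concrete, here is a minimal sketch of the map-and-reduce decomposition that many parallel programming models build on: the data is split into chunks, each chunk is processed independently, and the partial results are combined. The function names are hypothetical, and Python threads merely stand in for the cores or accelerators of a real machine. In CPython this shows the structure of the algorithm, not an actual speed-up.

```python
from concurrent.futures import ThreadPoolExecutor


def chunked(values, n_chunks):
    """Split values into at most n_chunks contiguous slices of similar size."""
    size = max(1, -(-len(values) // n_chunks))  # ceiling division
    return [values[i:i + size] for i in range(0, len(values), size)]


def partial_sum_of_squares(chunk):
    """Work done independently by one worker on its own slice."""
    return sum(x * x for x in chunk)


def parallel_sum_of_squares(values, n_workers=4):
    """Map each chunk to a worker, then reduce the partial results."""
    chunks = chunked(values, n_workers)
    with ThreadPoolExecutor(max_workers=n_workers) as pool:
        partials = list(pool.map(partial_sum_of_squares, chunks))
    return sum(partials)


if __name__ == "__main__":
    print(parallel_sum_of_squares(list(range(10))))
```

Because no chunk depends on another, the same decomposition can in principle be spread over many CPU cores or GPUs. The abstraction layers mentioned above exist largely to hide that distribution from the application developer.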

In addition, artificial intelligence (AI) is driving major changes in traditional HPC software, and new products are being adapted to support AI, machine learning and data analytics in general.

Why is exascale necessary?

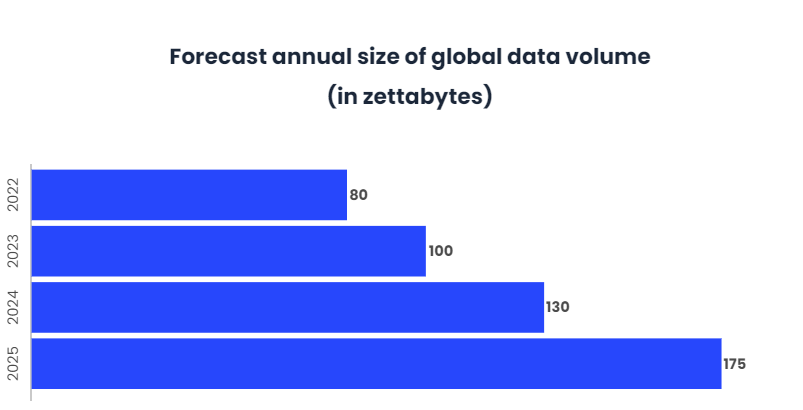

Massive data growth is driving new technologies to make intensive use of data. Digital transformation is also advancing by leaps and bounds, so the number of HPC and artificial intelligence workloads is growing rapidly.

In addition, advanced data analytics, IoT, simulations and different workloads related to data modelling give rise to a critical workflow for various businesses. This workflow therefore needs to be managed at a new level of scale and in real time: exascale.

But all these needs and new trends require a total redesign of computing architectures, software and data storage.

Benefits of exascale

The most outstanding benefit of exascale is undoubtedly the large number of computational operations per second it can perform, which corresponds to at least one exaflop. As mentioned above, this type of computing allows us to model, simulate and analyse all the data around us, solving the most complex challenges that arise in the world we know today.

In addition, it brings with it an important advance in the storage and service of information, thanks to AI. It adds the ability to answer complex questions by arranging data with an interconnection designed to be flexible and comprehensive. It also involves powerful, cost-effective storage designed for heavy workloads.

This brings with it the next generation of applications for innovation in the field of AI and HPC software development, so that they can adapt to the changes and advances of digitisation.

Cambridge Open Exascale Lab projects

One of the key research groups in this technology is the Cambridge Open Exascale Lab, consisting mainly of five projects that define the initial approach to exascale:

- oneAPI

This is Intel’s scalable programming model, focused on heterogeneous systems, which makes it easier to program and run code on diverse computer architectures. Within the lab, the oneAPI project is responsible for adapting existing applications to exascale and preparing the code for deployment.

- Scientific OpenStack

The Scientific OpenStack project is responsible for creating an environment powerful enough to deploy, manage and use HPC systems. It also supports on-premises, cloud-based and hybrid deployments. This allows cluster deployments to run particular workflows while leveraging leading technologies on exascale platforms.

- High-performance Ethernet

A study of low-latency Ethernet is being carried out, comparing different vendors’ HPC solutions so that a range of applications can be evaluated at different scales.

- Extreme scale display

This research uses different large-scale solid-state storage testbeds that, combined with processing engines and graphics software, allow exascale data sets to be visualised in real time. These techniques will result in tools that are easy to use and implement.

- Exascale storage solutions

Research is also under way into file systems designed from scratch for storage devices, with a focus on Intel’s open-source DAOS object storage.

Through these Cambridge Open Exascale Lab projects, and others carried out in different countries, the future of exascale is being built and today’s big data management challenges are being taken on. China, for instance, is another major player dedicated to building such cutting-edge supercomputers.

Conclusions

Today, all teams focused on the development of exascale supercomputers are looking to improve a myriad of sectors through data analysis, optimisation and advances in AI.

At the same time, various exascale components are being developed to be compatible with different versions and media, so that they integrate seamlessly and this new technology functions properly.

However, collaboration between different teams is essential to develop the right applications and software to implement these projects. The development and sustainability of the software is fundamental, so the different projects must have a well-defined development strategy. This underlines the essential role of software, which is the basis of this new technology.

Finally, these projects should seek to harness cognitive sciences to foster creativity and innovation in new exascale systems. The result is a technology with a more personalised and unique character, which is essential to work together with artificial intelligence.