Automation through Java JSOUP in a Spring Boot project

A few weeks ago, a colleague called me to ask if it’s possible to automate a task he carries out every day. The task consists of opening a website, filling in the required fields, then conducting a search. If the search returns results, a screenshot is taken and emailed to other colleagues.

Without seeing the web page, and only based on the brief conversation we had, I figured it would be a REST service using its respective filtering parameters, which could be seen in the browser’s own console. Ideally, this would be enough to invoke it and process the JSON response to determine whether there are results.

However, this was not the case. The form sent a POST request, informing the filters as form-data, and the response from the service was in HTML format, which may or may not include the search results in a table.

As a result, I had no choice but to look for a library to perform web scraping.

In this post, we will see how this automation task was addressed through the Java JSOUP library within a Spring Boot project, which will expose a single REST service with the same search filters as the form. Through this, we will be able to send the communication with the Spring implementation of JavaMailSender.

Although it doesn’t take a professional to work with this library, it’s recommended to have a basic notion of object orientation, as well as how to make calls through a REST client (such as Insomnia, Postman, Soap UI, …) and put breakpoints and debug in an IDE (such as Eclipse, IntelliJ, …).

But before all that, what is web scraping?

What is Scraping?

Scraping is a set of techniques to automatically obtain data from one or more web pages.

What is it useful for?

Some of the best-known uses for this information manipulation and analysis technique include:

- Collection and listing of information from different websites

- Search engine robots for analysis and classification of content

- Creation of alerts

- Monitoring of competitor processes

- Detection of changes on a website

- Analysis of broken links

- Etc.

It should be noted that in Spain (as well as in the USA and many other countries), web scraping is legal. However, laws can apply that involve administrative sanctions in cases of non-compliance with the General Data Protection Regulation (storage and treatment of third-party data without consent), or criminal sanctions for crimes against intellectual property. Scrape responsibly!

What libraries do we have at our disposal?

One of the most used programming languages for scraping is Python, but we have other libraries for the different languages that we use in our day to day, like:

- Python: Scrapy, BeautilShop

- Javascript: Osmosis, Apify SDK

- Java: JSOUP, Jaunt, Nutch

Initial analysis

Before addressing automation, it’s necessary to carry out an initial analysis of the site that we will be scraping, using web debugging tools such as Chrome DevTools (accessible using the F12 key once the Chrome browser is opened, or through its context menu: More Tools>Developer Tools).

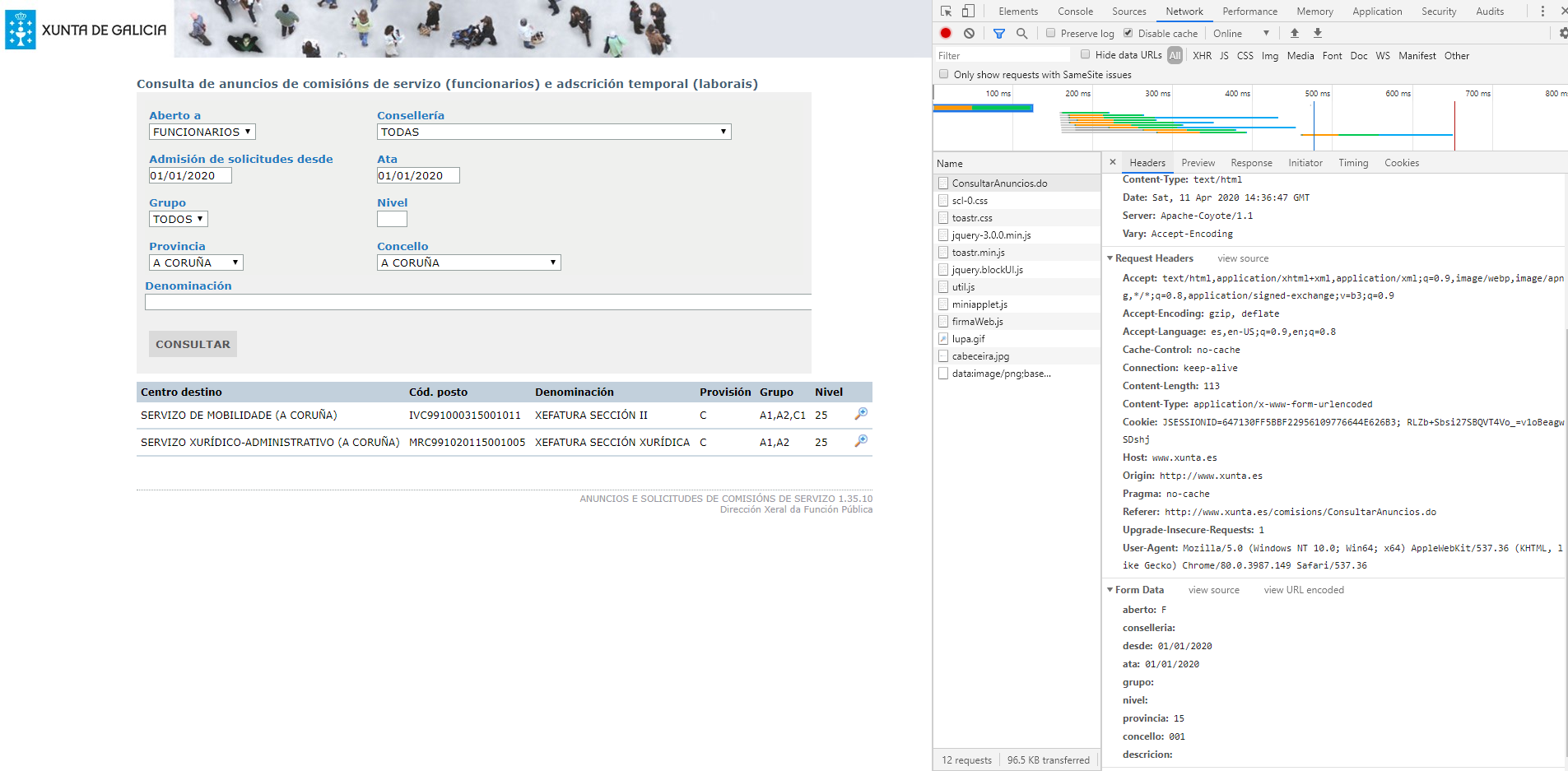

Once the query button is clicked, you can see all the requests that are launched from the network tab. Here you'll find all the information necessary to start coding the application logic:

- URL of the service

- Authentication type (if applicable)

- Search filters (nomenclature and format)

- Service response

Making different calls and varying the filters, we can see the following casuistry in the HTML response:

- If no results are produced, only one table is included (the one corresponding to the search form).

- If results are produced, two tables are displayed (a first one associated with the search form and a second one with the filtered ads).

- If an error occurs, only the form table and a warning are displayed, using the Toastr Javascript library.

Therefore, to cover the cases described above, the application must:

- Make a first call to the website to obtain cookies.

- Make the invocation to the service in order to inform the cookies obtained in the previous step, plus all the filters specified by the user.

- Check if an error has occurred using the status code and inspecting the Javascript + Toastr part.

- Analyze whether there is a second table within the results obtained, and if so, transform each row of that table and display the information in a DTO to subsequently manipulate and report more comfortably.

Configuration

To perform the task, we employed a Maven project generated from the Spring initializer of the website (start.spring.io) to which the JSOUP dependency in the POM was later added.

<dependency>

<groupId>org.jsoup</groupId>

<artifactId>jsoup</artifactId>

<version>1.13.1</version>

</dependency>

Key elements of the library

In the implemented code, we will see several of the key elements of JSOUP:

Connection: interface that provides simple methods to search for content on the web and parse them in Document.

Request: Represents an HTTP request.

Response: Represents an HTTP response.

Document: The base object of the library in which we can obtain the HTML code from the address we are inspecting.

Element: The minimum component within Document. From it, you can extract information, go through the hierarchy of nodes that compose it, and manipulate the HTML. The collection or plural of this element is known as Elements, an ArrayList <Element> extending class.

DataNode: The data node component for style content, script tags, etc.

Select(): One of the most important library methods and one that is implemented by Document, Element, and Elements. It supports both jquery and searching for CSS elements. Being that it’s a contextual method, we can filter by either selecting from a specific element or linking together select calls.

Request and obtain HTML

Using the following code, we see how to obtain cookies, fill in the attributes associated with the form and invoke the service, whose HTML format response will be available within the Document class.

// Realizamos petición a la página de la xunta para obtener las cookies

Connection.Response response = Jsoup

.connect("http://www.xunta.es/comisions")

.method(Connection.Method.GET)

.execute();

// Realizamos petición al servicio de consulta de anuncios

Document serviceResponse = Jsoup

.connect("http://www.xunta.es/comisions/ConsultarAnuncios.do")

.userAgent("Mozilla/5.0")

.timeout(10 * 1000)

.cookies(response.cookies())

.data("aberto", toString(criteria.getFinalUsers()))

.data("conselleria", toString(criteria.getCounselling()))

.data("desde", toString(formatDate(criteria.getStartDate())))

.data("ata", toString(formatDate(criteria.getEndDate())))

.data("grupo", toString(criteria.getGroup()))

.data("nivel", toString(criteria.getLevel()))

.data("provincia", toString(criteria.getProvinceCode()))

.data("concello", toString(criteria.getDistrict()))

.data("descricion", toString(criteria.getDescription()))

.post();

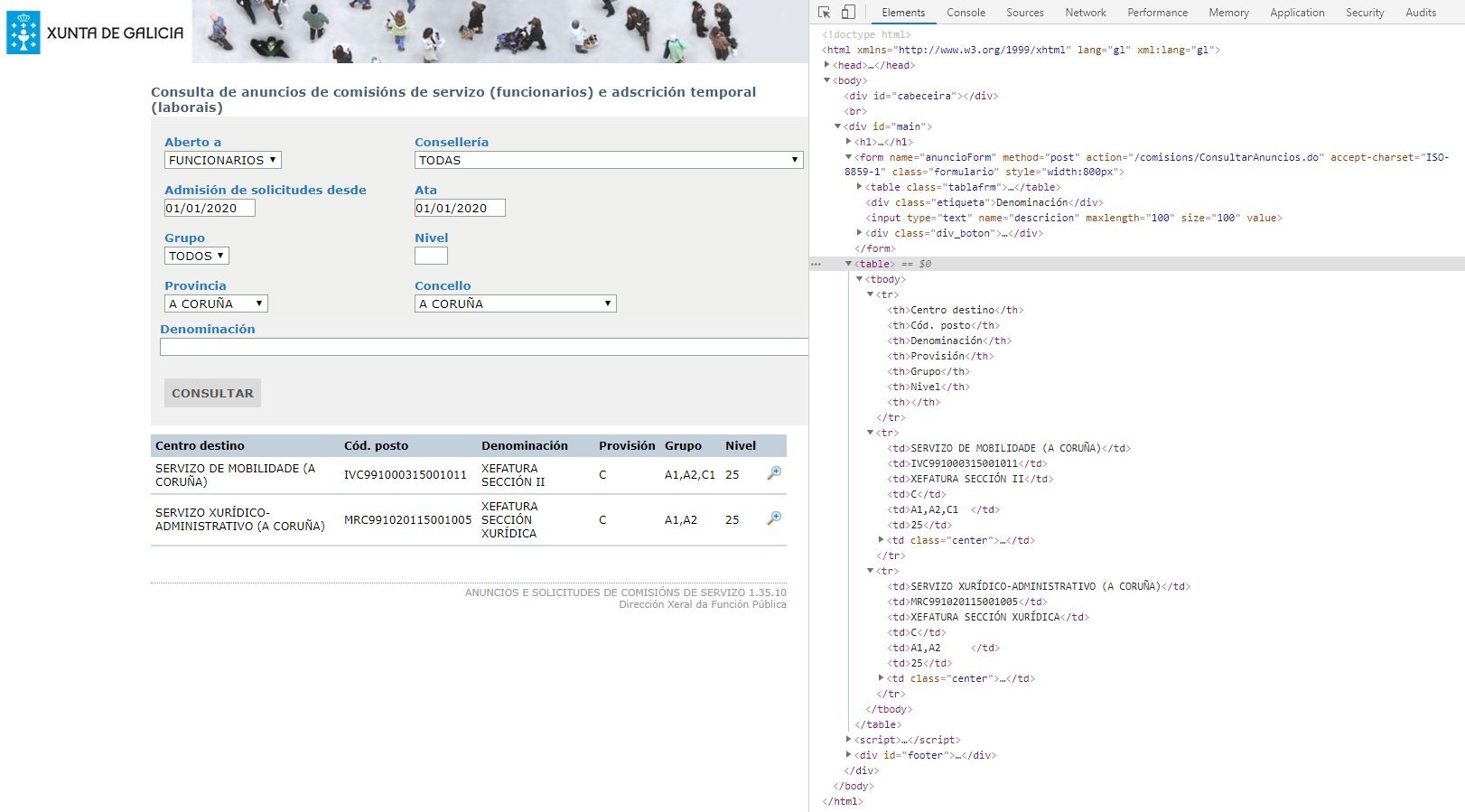

Analyzing the response

Once the service call is made, we can see through the returned HTML that there are not only results, but also a table, which corresponds to the different filters that make up the form. And if there are results that satisfy the search, a second table will be shown with the results.

Example:

To specify a selector that tells us if results are produced, simply filter by the <table> element and see if the total number of elements is greater than one.

To go through and obtain the different fields of each row, simply iterate by the number of elements that compose it.

// Procesamos la tabla de resultados: formulario + resultados.

Elements tables = serviceResponse.select("table");

if (tables.size() > 1) {

Elements rows = tables.get(1).select("tr");

// Eliminamos la última columna de la tabla (uris relativas).

for (int i = 1; i < rows.size(); i++) {

Elements values = rows.get(i).select("td");

String destinyCenter = values.get(0).text();

String jobCode = values.get(1).text();

String denomination = values.get(2).text();

String provision = values.get(3).text();

String group = values.get(4).text();

String level = values.get(5).text();

commissions.add(new CommissionResult(destinyCenter, jobCode, denomination, provision, group, level));

}

}

Automate REST service

For the automation task, I exposed a REST service in the code controller, which has an email address as a parameter for sending the results, as well as the same search filters that the website has.

You can consult all the information about the published service (entry, status codes, URL, etc.) in the following Github repository: https://github.com/hugogldrrl/java-jsoup

/**

* Fachada de consulta de anuncios de comisiones.

* @param recipient Destinatario para notificación de resultados.

* @param filters Filtros de búsqueda.

* @return Anuncios de comisiones.

* @throws MalformedURLException URL malformada.

* @throws HttpStatusException Respuesta no correcta y bandera de ignorar errores HTTP desactivada.

* @throws UnsupportedMimeTypeException Tipo de dato en respuesta no soportado.

* @throws SocketTimeoutException Tiempo de conexión agotado.

* @throws IOException Error desconocido.

* @throws MessagingException Error en el envío del correo de notificación.

*/

@GetMapping("commission")

public List getCommissions(@Email String recipient, @Valid CommissionCriteria filters)

throws MalformedURLException, HttpStatusException, UnsupportedMimeTypeException, SocketTimeoutException, IOException, MessagingException {

LOG.info("request started -> '/xunta/commission' -> recipient ({}), filters ({})", recipient, filters);

final List commissions = xuntaService.getCommissions(filters);

if (StringUtils.isNotEmpty(recipient) && commissions.size() > 0) {

MimeMessage msg = javaMailSender.createMimeMessage();

msg.setRecipient(Message.RecipientType.TO, new InternetAddress(recipient));

msg.setSubject("Xunta's Commission Results");

msg.setContent(xuntaService.createCommissionEmailContent(filters, commissions), "text/html;charset=UTF-8");

javaMailSender.send(msg);

}

LOG.info("request finished -> results ({})", commissions.size());

return commissions;

}

Using the service implemented to extract information from the website— for the same filters as those used in the previous captures from the browser— you can see that the same two results are obtained. Below, you can see the filters applied:

- Recipient of the email: “goldar@softtek.com”

- Date from: “2020-01-01”

- Date through: “2020-01-01”

- End users “F” (officials)

- Province code: “15” (A Coruña)

- Municipality code: “001” (A Coruña)

The request URL would be:

http://localhost:8080/jsoup/xunta/commission?recipient=hugo.goldar@softtek.com&startDate=2020-01-01&endDate=2020-01-01&finalUsers=F&provinceCode=15&district=001

The response (in JSON format) would be:

[

{

destinyCenter: "SERVIZO DE MOBILIDADE (A CORUÑA)",

jobCode: "IVC991000315001011",

denomination: "XEFATURA SECCIÓN II",

provision: "C",

group: "A1,A2,C1",

level: "25"

},

{

destinyCenter: "SERVIZO XURÍDICO-ADMINISTRATIVO (A CORUÑA)",

jobCode: "MRC991020115001005",

denomination: "XEFATURA SECCIÓN XURÍDICA",

provision: "C",

group: "A1,A2",

level: "25"

}

]

Email configuration

To send the notification by email, a Thymeleaf template and the Spring implementation of JavaMailSender were used, whose configuration can be found in the file “application.properties”.

For more information about Thymeleaf, you can read some of the posts already published on our blog (currently only available in Spanish): Thymeleaf as an MVC alternative, by Sebastián Castillo, and Thymeleaf and Flying Saucer to generate a PDF, by Emilio Alarcón.

# Configuración del correo.

spring.mail.host=smtp.gmail.com

spring.mail.port=587

spring.mail.username=tucorreo@gmail.com

spring.mail.password=secret

spring.mail.properties.mail.smtp.auth=true

spring.mail.properties.mail.smtp.connectiontimeout=5000

spring.mail.properties.mail.smtp.timeout=5000

spring.mail.properties.mail.smtp.writetimeout=5000

spring.mail.properties.mail.smtp.starttls.enable=trueWhen using Gmail, it’s necessary to enable access to less secure applications within account settings. Otherwise, an exception called “MailAuthenticationException” will be created.

org.springframework.mail.MailAuthenticationException:

Authentication failed; nested exception is javax.mail.AuthenticationFailedException:

Username and Password not accepted. Learn more at 535 5.7.8 https://support.google.com/mail/?p=BadCredentials

To enable this access, go to https://myaccount.google.com/lesssecureapps.

Conclusion

In this post, we briefly demonstrated how to easily extract information from a website with JSOUP, a Java library for web scraping, using its Connection, Document and Element as key elements, as well as being able to carry out simple filtering with the select interface that they provide us.

I encourage you to download the project code from the GitHub repository (https://github.com/hugogldrrl/java-jsoup) to try to debug and even analyze a different website from the one used in this project.

Please leave a comment if you have any suggestion or question… and subscribe to stay up to date 😉