Is Data Scraping one of the most demanded skills by companies?

The Internet generates millions of useful data every day. All of this data is recorded and stored, making the Internet an easily accessible hub that hosts an overwhelming volume of data, generated at immense speed with every passing moment. This data can be extracted to study recurring patterns and trends to assist in the deduction of useful insights and predictions.

When a large amount of information is aggregated in an organized manner, it can be used to help a company drive its business decisions. Of course, there is too much data online to do this manually and efficiently. That’s where Data Scraping comes in. This automation technique allows you to collect data in an organized manner quickly and efficiently.

How does Data Scraping work?

Data Scraping is the act of automating the process of extracting information from an unstructured data source such as websites, databases, applications, reviews, tables, images, and even audio sources, to restructure them and make them editable for machine learning systems. These systems then absorb the structured data, analyze it and provide intelligent information about it.

Once upon a time, data scraping was not a very popular skill and there was rarely any innovation or research that suggested ways to use such unstructured data. However, with the evolution of technology and especially of machine learning and data science in recent years, the Internet has become a mine of valuable data.

Scraping has become a crucial part of the big data industry as it provides access to information, such as contact details of potential customers, price data for price comparison websites and more, that can be used by business organizations. In 2019 there was a substantial growth in web scraping activities through which organizations sought to improve their operations. Therefore, the use of scraping has become a common technique for many companies, especially the larger ones such as Google.

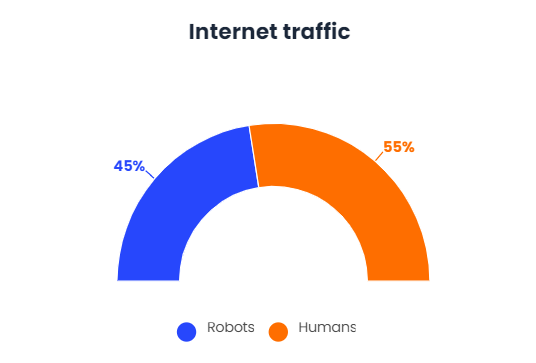

In fact, it is estimated that more than 45% of Internet traffic is done by robots and not by humans, and that 54 industries require Web Scraping specialists. The five main sectors that require these specialists include the industries: software, information technology and services, the financial sector, retail, and the marketing and advertising industry.

This should come as no surprise, since the relevance of the data has increased to such a high level in the last decade that industries are trying to prepare for possible future impacts and with as much data as possible. Data has become the golden key for any modern industry to achieve a secure and profitable future.

Advantages of web scraping

Web scraping offers several advantages, including the following:

- Faster: By handling large amounts of data which would take days or weeks to process through manual work, scraping can substantially reduce effort and increase decision speed.

- Reliable and consistent: manual scraping of data is very easy to lead to errors, e.g. typographical errors, forgotten information or information placed in the wrong columns. Automation of the scraping process ensures consistency and quality of data.

- It helps to reduce workloads.

- Less expensive: once implemented, the overall cost of data extraction is significantly reduced, especially when compared to manual work.

- Organized: The Scraping Expert can organize to scrape data on a regular basis or at specific times, for example when new data is available. In this way, the company ensures that it always has the latest data.

- Basic maintenance: Data scraping generally does not require much maintenance.

Disadvantages of web-scraping

While Web Scraping can provide a company with enormous benefits, there are also some disadvantages and assumptions on which it is based:

- Less complex websites: the more complex the website you want to scrape, the more difficult the scraping will be. The reasons are because setting up the scraper becomes more difficult, and maintenance costs may increase, because the expert is more likely to have errors and problems.

- Stable home page: Automated Web Scraping only makes sense if the target home page does not change its structure frequently. Each change of structure implies additional costs, because the scraping will need to be adjusted.

- Structured data: web-scraping will not work if you want to scrape data from 1000 different websites and each website has a completely different structure. There will need to be some basic structure that differs only in certain situations.

- Low protection: if data on the web is protected, scraping can also become a challenge and increase costs.

Importance of Data Scraping for Business

What gives a company a sustainable competitive advantage in the age of digitalization is data. Data is the main factor that will determine whether a company will be able to keep up with its competitors. The more data you have that your competitors cannot access, the greater the competitive advantage.

There is almost no area where data scraping has a profound influence. As data is increasingly becoming a primary resource for competition, data acquisition has also become especially important. Companies extract information from a website for several reasons, two of which are the most common: to grow the business by establishing a sales channel and to find out where competitors are setting their prices.

But web scraping can add much more value to a business in other ways. Here are some other reasons why a business, whether large or small, needs data scraping to make more money from its business:

- Marketing and sales: Web scraping can help to get potential customers, analyze people’s interests and monitor consumer sentiment by regularly extracting customer ratings from different platforms.

- Price comparison: One of the best ways to use data scraping technology is to collect price information. On the one hand, you can collect data for yourself to help you position a product against the competition, and on the other hand, to extract competitors’ prices by tracking their every move.

- Reputation and brand management: scraping is a good way to keep track of what people are saying about a company. Multiple reputation channels can be managed efficiently. In addition, it also helps to extract information about how often the company was mentioned on the Internet. That way, the company could identify any negative developments early on and avoid damaging the brand.

- Customer analysis: Scraping can help collect useful demographic information about customers, more effective advertising strategies can be created using that information, and customer behavior data can also be collected to understand the type of audience and choice of ads to be seen.

- Lead generation: data scraping is a very good tool for identifying potential customers. It can help you create your own lists based on what you know about your prospects, looking at data such as location, industry, previous purchases and more.

- Strategic concerns: With this technology you can find information to help companies with almost any strategic consideration possible. The key is to have the right set of tools to help get the job done in an organized and constructive manner. This part is also very useful, for example, in banking when it comes to investment decisions, as scraping can help detect risks and investment opportunities.

- Improving SEO activities: web scraping solves the problem of finding the right keywords by crawling the common keywords that have already been used. You can also scrape out the information of competitors to discover the keywords used by them. This way, one can use different and unique keywords to create a positive impact on the SEO strategy.

The Dark Side of Data Scraping

There are many positive uses for data scraping, but it is also abused by a small minority, and despite all that can be achieved with it there are some sectors that consider it an unethical tool.

GDPR requires companies to have a purpose for processing the data. In terms of data erasure, companies that cannot justify or establish a legitimate purpose should not perform data erasure. Naturally, a careful and considered documented analysis of the purpose is recommended, bearing in mind that individuals should reasonably expect their data to be processed for the identified purpose.

Purpose limitation means that companies should only collect and process personal data for specific, explicit and legitimate purposes and not engage in further processing unless it is compatible with the original purpose for which the data were deleted.

Many of the organizations face the challenge of how to address web scraping attacks in an efficient and scalable manner. The impact of this attack can be broad, ranging from excessive expenditure on infrastructure to devastating loss of intellectual property.

The most common misuse of data scraping is the collection of email. That is, using data scraping from websites, social networks and directories to get people’s email addresses, which are then sold to spammers or scammers.

In some jurisdictions, the use of automated means such as data scraping to collect e-mail addresses for commercial purposes is illegal, and is almost universally considered a bad marketing practice.

Another misuse is to extract data without the permission of the website owners. The two most common cases are price theft and content theft.

Conclusions

While data scraping may seem daunting, it doesn’t have to be. The benefits are enormous, and there is a good reason why all large companies use this technology to help them shape their business strategy. It’s cheap to get this data, but it’s incredibly valuable when you have it to work with.

Data scraping skills have definitely become one of the most sought after and coveted skills of the 21st century. It has become a highly recommended and needed tool since it only leads to adding value to the company.

However, its dark side should not be overlooked. Companies must understand the privacy risks associated with the practice, especially when establishing a legal basis for data scraping. Businesses should also ensure that a clear purpose is established for data scraping, that only data necessary for the purpose in question is scraped.