Google Chrome's new API: FLoC

Machine learning projects depend on access to large-scale datasets. Until now, the usual modelling process has relied on collecting user data on central servers, using tracking techniques such as third-party cookies and fingerprinting. In a world where privacy is becoming paramount, this approach has become a liability.

The use and management of cookies have grown in importance alongside users’ concern about how their data is handled and how their privacy is protected. That concern is growing steadily, and it is pushing browsers to gradually abandon this model.

Google Chrome announced the Privacy Sandbox some time ago, but it was in January 2021 that it shared its progress towards eliminating third-party cookies and replacing them with privacy-preserving alternatives. Its main proposal is Federated Learning of Cohorts (FLoC).

FLoC is part of Google’s Privacy Sandbox, an initiative to build a more private browser while keeping web advertising much as it is today, but without third-party cookies. Websites will look the same to users, yet the way advertising mechanisms work behind the scenes will change completely. Google is also expected to remove support for third-party cookies entirely by 2022.

What is federated learning?

This Chrome API is based on Federated Learning, a distributed model training technique where the data stays with the user, i.e. client data is never transmitted to any central processor or server.

General models are trained without ever seeing the underlying data; they are built from local models trained on personal datasets. In other words, this type of learning is a framework for building a collective model from data that stays distributed among its owners and is never disclosed.

Thus, many browsers work together to build a shared model without exchanging actual sample data. Google proposes that browsers group users with similar interests based on the URLs they visit. The browsing data would be collected and kept locally and would never leave the device, which is the basis of the approach’s privacy claims.
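To make the idea concrete, here is a minimal sketch of federated averaging (FedAvg), a common way of combining locally trained models. It is a generic illustration of federated learning, not Chrome’s FLoC code: the linear model, learning rate, number of rounds and client data are all assumptions chosen for readability. Each client trains on its own data and sends back only model weights, which the server averages, weighted by sample count.

```python
import random


def local_update(weights, data, lr=0.1, epochs=5):
    """Gradient descent on one client's private (x, y) pairs for the model y = w*x + b."""
    w, b = weights
    n = len(data)
    for _ in range(epochs):
        grad_w = sum(2 * ((w * x + b) - y) * x for x, y in data) / n
        grad_b = sum(2 * ((w * x + b) - y) for x, y in data) / n
        w, b = w - lr * grad_w, b - lr * grad_b
    return w, b  # only these weights leave the client, never the data


def federated_average(client_weights, client_sizes):
    """Server step: average client weights, weighted by each client's sample count."""
    total = sum(client_sizes)
    w = sum(cw[0] * n for cw, n in zip(client_weights, client_sizes)) / total
    b = sum(cw[1] * n for cw, n in zip(client_weights, client_sizes)) / total
    return w, b


def train(clients, rounds=50):
    """Alternate local training on every client with server-side averaging."""
    global_model = (0.0, 0.0)
    for _ in range(rounds):
        updates = [local_update(global_model, data) for data in clients]
        global_model = federated_average(updates, [len(data) for data in clients])
    return global_model


def make_client(rng, n=20):
    """Hypothetical private dataset following y = 3x + 1 with a little noise."""
    xs = [rng.uniform(-1, 1) for _ in range(n)]
    return [(x, 3 * x + 1 + rng.gauss(0, 0.05)) for x in xs]


if __name__ == "__main__":
    rng = random.Random(0)
    clients = [make_client(rng) for _ in range(3)]
    w, b = train(clients)
    print(f"w={w:.2f}, b={b:.2f}")  # should land close to w=3, b=1
```

The server never receives any (x, y) pair; it only sees the weights each client returns after local training.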

Federated Learning of Cohorts (FLoC)

FLoC is an API that is part of the Privacy Sandbox experiments on user privacy. It groups users with similar browsing habits into cohorts, on the principle that individual clients cannot be distinguished within a group, because only the group’s identifier, not the individual’s, is known.

This new technology uses machine learning algorithms to form these clusters within the browser, with the aim that personalised advertising targeted at cohorts performs about as well as advertising based on third-party cookies, on the assumption that all users in the same group have similar interests.

Most importantly, the information that feeds the algorithm is kept in the local browser and is not shared with any server. The browser exposes only the generated cohort, which represents a whole group of people; combined with differential privacy, this maximises anonymity.
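The cohort calculation can be sketched as follows. Chrome’s FLoC origin trial described using SimHash, a locality-sensitive hash, over the domains in a user’s browsing history, so that similar histories tend to land in the same cohort. In this minimal sketch the SHA-256 per-domain hash, the 8-bit cohort size and the minimum cohort size `k` are assumptions for illustration, and `expose_cohort` is a hypothetical helper showing the idea that a cohort is revealed only once enough people share it.

```python
import hashlib


def domain_bits(domain: str, num_bits: int) -> list[int]:
    """Map a domain to num_bits pseudo-random bits (SHA-256 is an illustrative choice)."""
    value = int.from_bytes(hashlib.sha256(domain.encode("utf-8")).digest(), "big")
    return [(value >> i) & 1 for i in range(num_bits)]


def simhash_cohort(visited_domains, num_bits: int = 8) -> int:
    """SimHash over the set of visited domains: each output bit is a majority vote."""
    totals = [0] * num_bits
    for domain in set(visited_domains):
        for i, bit in enumerate(domain_bits(domain, num_bits)):
            totals[i] += 1 if bit else -1
    cohort = 0
    for i, total in enumerate(totals):
        if total > 0:  # ties and negative votes leave the bit at 0
            cohort |= 1 << i
    return cohort


def expose_cohort(cohort: int, cohort_sizes: dict[int, int], k: int = 2000):
    """Hypothetical gate: reveal the cohort only if at least k browsers share it."""
    return cohort if cohort_sizes.get(cohort, 0) >= k else None


if __name__ == "__main__":
    history = ["news.example", "football.example", "recipes.example"]
    cohort = simhash_cohort(history)
    print(cohort)  # computed locally; the history itself is never sent anywhere
    print(expose_cohort(cohort, {cohort: 5000}))
```

Because SimHash takes a per-bit majority vote, two histories that share most of their domains will usually agree on most bits, which is what lets people with similar habits end up in the same cohort.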

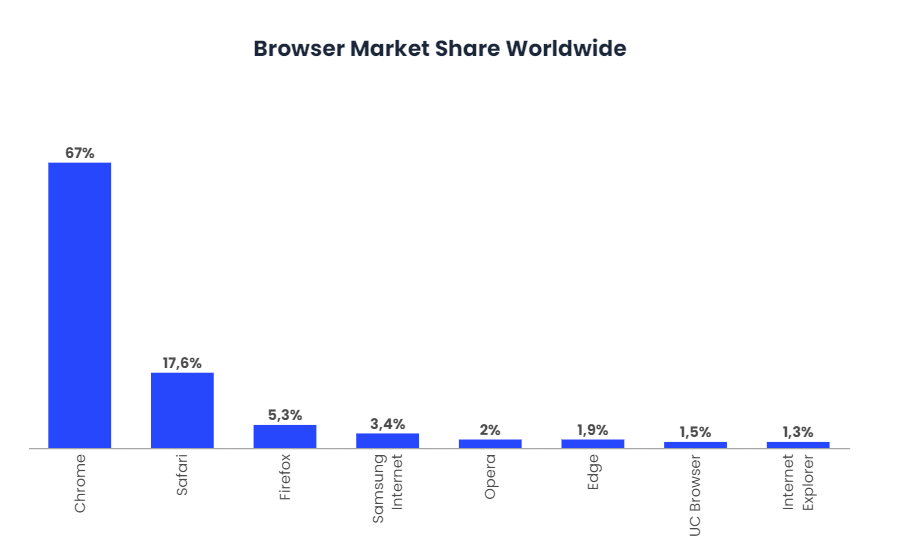

Disabling a method as established as third-party cookies is a laborious and complicated task. Three pillars must be considered: users and their privacy, web publishers, and advertisers. Google Chrome has ruled out removing cookies immediately, unlike its rivals Safari (Apple), Firefox and Edge (Microsoft), which already restrict third-party cookies.

On the other hand, some use cases cannot be handled by FLoC. Measurement and fraud prevention are covered by other Privacy Sandbox proposals, while TURTLEDOVE, SPARROW and FLEDGE have been developed for ads aimed at custom audiences, such as remarketing. FLoC is also not without risk: the machine learning algorithm could unintentionally create groups that reveal sensitive categories such as race, sexuality or mental illness. Google continues to work on this problem, either by reconfiguring the algorithm or by blocking such groups.
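One simple way to flag such groups is to compare how often a cohort’s members visit sites in a sensitive category with the rate across all users, and to block cohorts where that share is disproportionately high. This is a hypothetical sketch, not Google’s actual method; the `max_ratio` threshold and the sample data are assumptions.

```python
def flag_sensitive_cohorts(cohort_members, sensitive_users, max_ratio=2.0):
    """Return the cohorts whose share of sensitive-category visitors exceeds
    max_ratio times the share across all users (hypothetical blocking rule)."""
    all_users = set().union(*cohort_members.values())
    if not all_users:
        return set()
    baseline = len(all_users & sensitive_users) / len(all_users)
    blocked = set()
    for cohort, members in cohort_members.items():
        if not members:
            continue
        share = len(members & sensitive_users) / len(members)
        if share > max_ratio * baseline:
            blocked.add(cohort)
    return blocked


if __name__ == "__main__":
    cohorts = {
        1: {"u1", "u2", "u3", "u4"},
        2: {"u5", "u6", "u7", "u8"},
        3: {"u9", "u10", "u11", "u12"},
    }
    # Toy set of users who visit sites in one sensitive category.
    sensitive = {"u1", "u2", "u3"}
    print(flag_sensitive_cohorts(cohorts, sensitive))  # {1}
```

Here the overall share is 3/12 = 0.25, while cohort 1 has a share of 0.75, above twice the baseline, so only cohort 1 would be blocked.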

Additional privacy benefits

The Privacy Sandbox also includes marketing approaches for creating audiences and making them available to advertisers without cookies. One of them is FLEDGE, which keeps information about bids and budgets for different campaigns on a trusted server. FLoC and FLEDGE not only explore privacy-preserving alternatives but also help buyers decide how much to bid for ads.
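The bidding idea can be sketched as a tiny auction run entirely inside the browser. This is a hypothetical simplification: in FLEDGE, buyers and sellers supply JavaScript functions (`generateBid` and `scoreAd`) that the browser runs on-device, whereas here those roles are played by plain Python functions, and the `TRUSTED_SIGNALS` dictionary stands in for the trusted server’s budget data. All names, bids and budgets are assumptions.

```python
from dataclasses import dataclass


@dataclass
class InterestGroup:
    owner: str       # buyer that added the browser to this group (hypothetical)
    name: str        # campaign the group belongs to
    base_bid: float  # buyer's default bid, in arbitrary currency units


# Stand-in for the trusted server: remaining budget per campaign (hypothetical data).
TRUSTED_SIGNALS = {"shoes-retargeting": 12.0, "travel-deals": 0.0}


def generate_bid(group: InterestGroup, signals: dict) -> float:
    """Buyer logic: bid the base amount only while the campaign still has budget."""
    return group.base_bid if signals.get(group.name, 0.0) > 0 else 0.0


def score_ad(bid: float) -> float:
    """Seller logic: in this sketch the desirability score is simply the bid."""
    return bid


def run_auction(groups, signals):
    """Run the auction locally; only the winning group (or None) is returned."""
    best, best_score = None, 0.0
    for group in groups:
        score = score_ad(generate_bid(group, signals))
        if score > best_score:
            best, best_score = group, score
    return best


if __name__ == "__main__":
    groups = [
        InterestGroup("buyer-a.example", "shoes-retargeting", 1.5),
        InterestGroup("buyer-b.example", "travel-deals", 3.0),
    ]
    winner = run_auction(groups, TRUSTED_SIGNALS)
    print(winner.name)  # shoes-retargeting: travel-deals has no budget left
```

The budget lookup is what lets a buyer adjust its bid in real time without the browser revealing which interest groups it belongs to.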

Within Chrome’s Privacy Sandbox, several technologies have been proposed to measure the performance of ad campaigns without third-party cookies, protecting privacy while still meeting advertisers’ requirements. These are event-level reporting and aggregate reporting, which let models recognise patterns in the data and accurately measure groups of users with related interests.
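Aggregate reporting can be illustrated with a minimal sketch: conversions are counted per campaign and noise is added before the report is released, so no individual user can be singled out. The Laplace mechanism used here is a standard differential-privacy technique chosen for illustration; the noise used by Chrome’s proposed APIs may differ, and `epsilon`, the campaign names and the assumption that each user contributes at most one conversion are all hypothetical.

```python
import random
from collections import Counter


def laplace_noise(scale: float, rng: random.Random) -> float:
    """Laplace(0, scale) sample: the difference of two exponentials with mean `scale`."""
    return rng.expovariate(1 / scale) - rng.expovariate(1 / scale)


def aggregate_report(conversions, campaigns, epsilon=1.0, rng=None):
    """Noisy per-campaign conversion counts.

    Assumes each user contributes at most one conversion, so one user changes
    the counts by at most 1 (sensitivity 1) and the noise scale is 1 / epsilon.
    Every campaign in `campaigns` is reported, even with zero conversions,
    so the set of keys itself reveals nothing.
    """
    rng = rng or random.Random()
    counts = Counter(conversions)
    scale = 1.0 / epsilon
    return {c: counts.get(c, 0) + laplace_noise(scale, rng) for c in campaigns}


if __name__ == "__main__":
    # Hypothetical conversion log: one campaign ID per converting user.
    log = ["spring-sale", "spring-sale", "newsletter"]
    report = aggregate_report(log, ["spring-sale", "newsletter", "retargeting"],
                              epsilon=1.0, rng=random.Random(42))
    for campaign, noisy_count in report.items():
        print(f"{campaign}: {noisy_count:.1f}")
```

A smaller `epsilon` means more noise and stronger privacy; with large campaigns the relative error stays small, which is why aggregate rather than per-user reports suit this approach.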

Google is also evaluating how the proposed conversion measurement APIs can be used alongside its own measurement products, for example web tagging with the global site tag or with Google Tag Manager.

Another important aim of the Privacy Sandbox is to protect individuals from tracking techniques that exploit their digital footprint. Gnatcatcher is Chrome’s new approach to masking IP addresses and protecting people’s identity without interfering with the normal operation of websites, preventing users from being identified without their consent.

Finally, using FLoC together with the Trust Token API will allow companies to distinguish real users from fraudulent ones without exposing people’s identities in the process. This should improve fraud detection on mobile devices while protecting users.
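The idea behind this kind of anonymous token can be shown with a textbook RSA blind signature: an issuer vouches for a token without seeing it, so when the token is later redeemed it cannot be linked back to the moment it was issued. This is a swapped-in technique for illustration only; the Trust Token API builds on the Privacy Pass protocol rather than RSA, and the tiny key below is insecure and chosen only so the arithmetic stays readable.

```python
import hashlib
import math
import secrets

# Textbook toy RSA key for the issuer (far too small to be secure; illustration only).
P, Q = 61, 53
N = P * Q                          # public modulus
E = 17                             # public exponent
D = pow(E, -1, (P - 1) * (Q - 1))  # private exponent, known only to the issuer


def token_to_int(token: bytes) -> int:
    """Hash the token into the range [0, N)."""
    return int.from_bytes(hashlib.sha256(token).digest(), "big") % N


def blind(m: int):
    """User side: hide m behind a random factor r before sending it to the issuer."""
    while True:
        r = secrets.randbelow(N - 2) + 2
        if math.gcd(r, N) == 1:
            return (m * pow(r, E, N)) % N, r


def issuer_sign(blinded: int) -> int:
    """Issuer side: in practice it signs only for users it judged legitimate; it never sees m."""
    return pow(blinded, D, N)


def unblind(blind_sig: int, r: int) -> int:
    """User side: strip the blinding factor to obtain an ordinary signature on m."""
    return (blind_sig * pow(r, -1, N)) % N


def verify(token: bytes, signature: int) -> bool:
    """Anyone holding the public key (N, E) can check the token at redemption time."""
    return pow(signature, E, N) == token_to_int(token)


if __name__ == "__main__":
    token = secrets.token_bytes(16)
    blinded, r = blind(token_to_int(token))
    signature = unblind(issuer_sign(blinded), r)
    print(verify(token, signature))  # True
```

The issuer only ever sees `blinded`, which is masked by the random factor `r`, so a valid signature presented later proves "this user was vouched for" without revealing which issuance it came from.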

First tests in 2021

The FLoC system will begin public testing in March 2021, with testing by advertisers to follow later in the year. This extended advertiser testing will give Google fuller feedback on the model.

So far, the simulation tests conducted have yielded positive results. Chrome teams claim that, by using interest-based audiences, FLoC can provide an effective replacement signal for third-party cookies.

For FLoC to replace third-party cookies, websites must adopt it, but critics worry that it would reinforce Google’s data monopoly and harm competition. In addition, the EFF believes it may enable the targeting of vulnerable populations, although Google’s policy prohibits showing personalised ads based on sensitive categories such as race, sexuality or mental illness.